Introduction

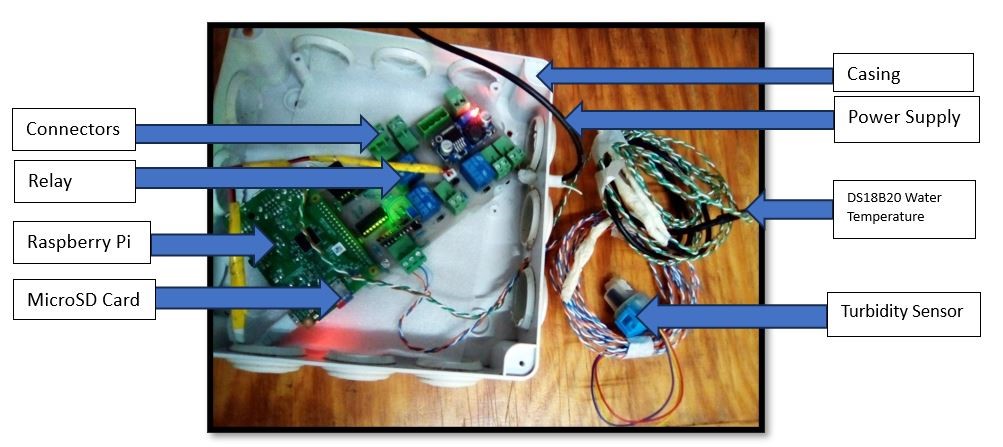

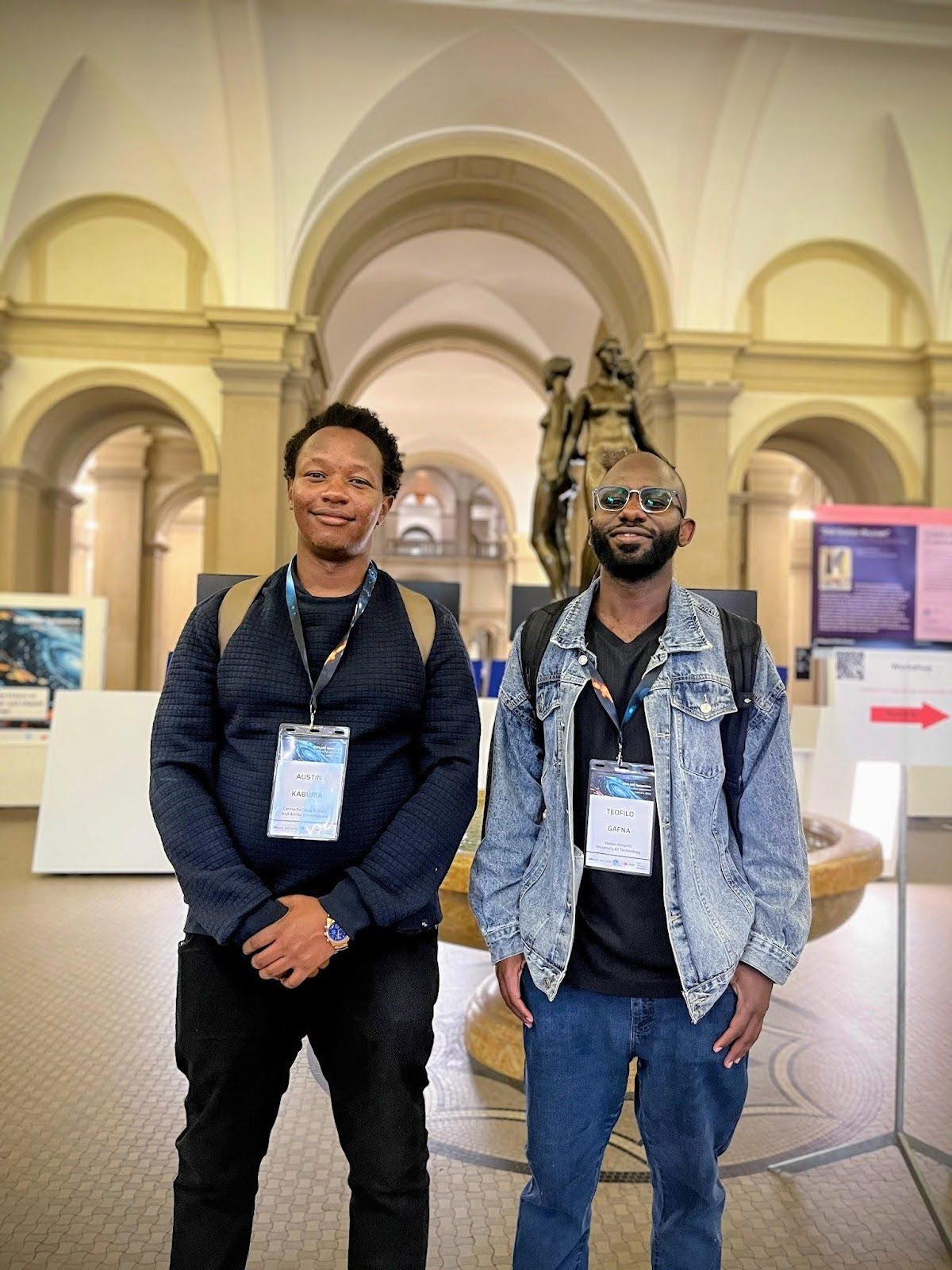

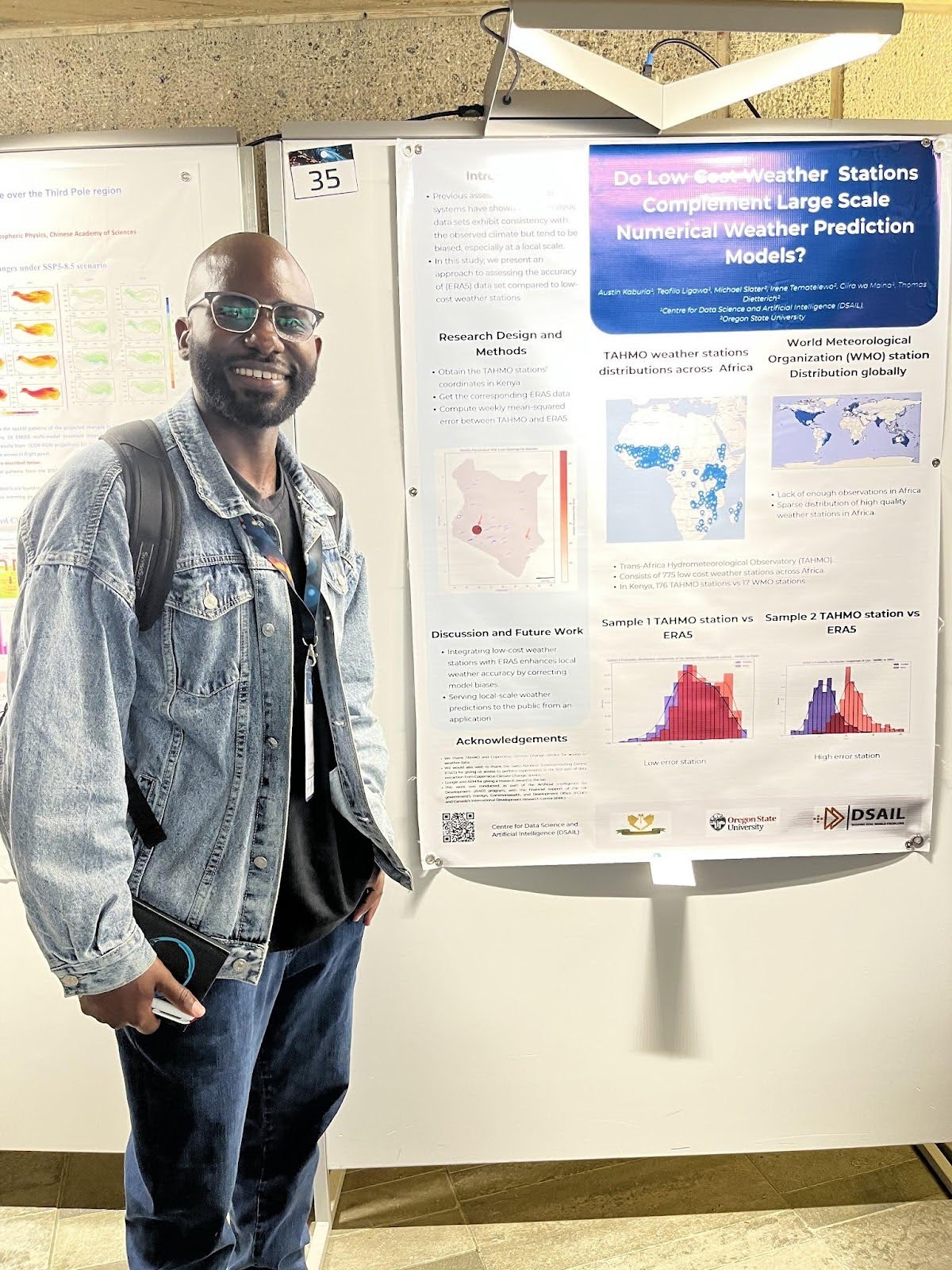

From 2 to 4 June 2025, Austin and I had the privilege of attending the 2025 EXCLAIM Symposium at ETH Zürich, Switzerland. The conference focused on the intersection of AI and climate science. It brought together researchers, data scientists, climate modelers, and technologists all working toward one goal: making Earth system science more accurate, equitable, and actionable using artificial intelligence. We also visited the Swiss National Supercomputing Centre (CSCS) offices in Lugano, where we toured the machine room and learnt how the machines work from the server side and how the supercomputers are maintained, including their innovative lake water cooling system.

This blog post captures some of the most insightful themes, key models, and reflections from my time at the conference.

AI for Climate: From Global Forecasts to Localised Impact

A major theme at EXCLAIM was the transition from global-scale modelling to localised forecasting. Several discussions shed light on downscaling global predictions—transforming coarse-resolution data into high-resolution forecasts for actionable use.

For instance, assume our data is a reanalysis dataset like ERA5 (a climate reanalysis dataset produced by ECMWF, offering historical atmospheric data based on both observations and model outputs), which uses a 0.25° × 0.25° grid (i.e., one pixel = 0.25 degrees latitude × 0.25 degrees longitude).

In this example, 0.25 degrees near the equator translates to roughly 28 km on the surface; downscaling here means producing forecasts at spatial resolutions as fine as about 0.01 degrees, which is roughly 1.11 km.

This is crucial for capturing small-scale weather events like local rainfall or thunderstorms, which are often smoothed out in global models.
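The degree-to-kilometre figures above can be checked with a short calculation. The sketch below assumes a spherical Earth with a mean radius of 6371 km, and the function name `grid_spacing_km` is purely illustrative. It also shows that the east-west spacing shrinks as you move away from the equator.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumption: mean Earth radius, spherical approximation


def grid_spacing_km(resolution_deg, latitude_deg=0.0):
    """Return (north-south, east-west) spacing in km for a regular lat/lon grid."""
    km_per_degree = math.pi * EARTH_RADIUS_KM / 180.0  # about 111.2 km per degree
    north_south = resolution_deg * km_per_degree
    # Meridians converge toward the poles, so east-west spacing scales with cos(latitude).
    east_west = north_south * math.cos(math.radians(latitude_deg))
    return north_south, east_west


if __name__ == "__main__":
    for resolution in (0.25, 0.01):
        ns, ew = grid_spacing_km(resolution)
        print(f"{resolution} deg at the equator: {ns:.2f} km x {ew:.2f} km")
    # 0.25 deg is about 27.80 km and 0.01 deg is about 1.11 km at the equator
```

At 60 degrees latitude the same 0.25° cell is only about half as wide east-west, which is worth keeping in mind when comparing resolutions across regions.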

I learnt that diffusion models and U-Net architectures are now being used for spatial super-resolution of weather fields. Diffusion models iteratively denoise random (or coarse) inputs to match the true high-resolution target fields, guided by physics-informed learning.
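To make the iterative denoising idea concrete, here is a minimal sketch of a DDPM-style reverse sampling loop in NumPy. It is not how any model presented at the symposium is implemented: the noise schedule, the step count, and the function name are assumptions, and the trained network is replaced by an "oracle" that peeks at the target field to compute the noise. In a real super-resolution setup, that oracle would be a learned network (for example a U-Net) conditioned on the coarse input.

```python
import numpy as np


def reverse_diffusion(target, steps=50, seed=0):
    """Toy DDPM reverse process; an oracle stands in for the trained denoiser."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(1e-4, 0.02, steps)  # assumed linear noise schedule
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)

    x = rng.standard_normal(target.shape)  # start from pure Gaussian noise
    for t in range(steps - 1, -1, -1):
        # Stand-in for the network: the noise implied by the known target field.
        eps_hat = (x - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])
        # Standard DDPM posterior mean given the predicted noise.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        # Fresh noise is added at every step except the last one.
        noise = rng.standard_normal(target.shape) if t > 0 else 0.0
        x = mean + np.sqrt(betas[t]) * noise
    return x


if __name__ == "__main__":
    # Synthetic smooth "high-resolution" field, purely illustrative.
    yy, xx = np.meshgrid(np.linspace(0, 1, 8), np.linspace(0, 1, 8), indexing="ij")
    target = np.sin(2 * np.pi * xx) * np.cos(2 * np.pi * yy)
    sample = reverse_diffusion(target)
    print("max abs error:", np.max(np.abs(sample - target)))
```

Because the oracle is perfect, the loop recovers the target field; with a learned denoiser the output would instead be one plausible high-resolution sample.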

Aurora: A Transformer-Only Weather Model

Microsoft’s Aurora, presented at EXCLAIM 2025, is a cutting-edge AI weather model featuring a 1.3-billion-parameter transformer architecture (a combination of Perceiver and 3D Swin Transformer). It is trained on multimodal datasets, including:

- ERA5

- GFS (Global Forecast System): A global numerical weather prediction model developed by the U.S. National Weather Service, known for providing short- to medium-range forecasts.

- IFS (Integrated Forecasting System): ECMWF’s flagship operational model that powers some of the most accurate medium-range weather forecasts in the world.

Key points about the model:

- It uses transformers exclusively (no GNNs), including a 3D Swin Transformer core for spatio-temporal modelling.

- The training data spans multiple sources: reanalyses, forecasts, and observations.

- Fine-tuning strategies (e.g., LoRA) helped extend lead times and accuracy.
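LoRA itself is easy to sketch: the pretrained weight matrix stays frozen and only a low-rank update is trained. The NumPy example below is a generic illustration, not Aurora's code; the class name, rank, and scaling values are assumptions chosen for readability.

```python
import numpy as np


class LoRALinear:
    """Linear layer with a frozen weight W and a trainable low-rank update B @ A."""

    def __init__(self, weight, rank=4, alpha=8.0, seed=0):
        rng = np.random.default_rng(seed)
        out_dim, in_dim = weight.shape
        self.weight = weight  # frozen pretrained weight, shape (out_dim, in_dim)
        self.A = rng.standard_normal((rank, in_dim)) * 0.01  # trainable
        self.B = np.zeros((out_dim, rank))  # trainable, zero-initialised
        self.scale = alpha / rank

    def __call__(self, x):
        # Base projection plus the scaled low-rank correction.
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_parameters(self):
        return self.A.size + self.B.size


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    W = rng.standard_normal((64, 64))
    layer = LoRALinear(W, rank=4)
    x = rng.standard_normal((2, 64))
    # With B initialised to zero, the adapted layer matches the frozen layer.
    print(np.allclose(layer(x), x @ W.T))
    print(layer.trainable_parameters(), "trainable vs", W.size, "frozen")
```

The appeal for large weather models is the parameter count: here only 512 values would be trained against 4096 frozen ones, and the gap grows with layer size.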

Hybrid Models: GNNs, Transformers, Physics, and DOP

While Aurora uses transformers only, models like AIFS (from ECMWF) and NeuralGCM (from Google) offer hybrid architectures:

- NeuralGCM blends traditional physical models with neural surrogates, learning corrections to fluid dynamics.

- AIFS combines graph neural networks for encoding/decoding with transformers for temporal rollout—showing strong performance across variables and lead times.

Another major highlight from ECMWF was GraphDOP, which is trained only on observations (satellite and in-situ, with no physics-based reanalysis inputs) and uses a GNN encoder/decoder with a transformer-based processor. It learns latent Earth-system dynamics and produces 5-day forecasts directly from raw observations. Although it is still catching up to full NWP systems, GraphDOP’s key features include:

- End-to-end data-driven (no assimilation or physical priors)

- Latent space prediction from real-world sensors

- Extendable to probabilistic versions using diffusion and proper scoring

Downscaling, Uncertainty & Ensembles

Another major track I followed was downscaling, especially:

- Statistical downscaling using ConvLSTMs and vision transformers.

- Super-resolution with diffusion models.

- Bias correction using observed datasets (ground truth).

- Deterministic models (like Aurora’s single prediction) vs. probabilistic forecasts (multiple ensemble members capturing different plausible futures).
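One common way to compare a deterministic forecast with an ensemble is a proper scoring rule such as the continuous ranked probability score (CRPS). The sketch below uses the standard ensemble estimator, the mean of |x_i - y| minus half the mean of |x_i - x_j|. The function name and the rainfall numbers are made up for illustration. For a single member, the score reduces to the absolute error.

```python
import numpy as np


def ensemble_crps(members, observation):
    """Estimate CRPS for one scalar observation from ensemble members (lower is better)."""
    members = np.asarray(members, dtype=float)
    # Average distance between each member and the observation.
    skill = np.mean(np.abs(members - observation))
    # Average distance between all pairs of members (ensemble spread).
    spread = np.mean(np.abs(members[:, None] - members[None, :]))
    return float(skill - 0.5 * spread)


if __name__ == "__main__":
    observed_rain_mm = 5.0  # hypothetical station observation
    print(ensemble_crps([3.0], observed_rain_mm))  # 2.0, same as the absolute error
    print(round(ensemble_crps([3.0, 5.0, 7.0], observed_rain_mm), 3))  # 0.444
```

In this toy case the ensemble scores better than the single forecast because its spread covers the observed value, which is the kind of uncertainty information a deterministic run cannot provide.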

Insights for My Own Work

As someone working on AI for weather forecasting in Africa, the symposium helped me:

- Rethink the data pipeline for local high-resolution targets.

- Rethink how to calibrate models using observed weather station data.

- Explore ensemble weighting and probabilistic outputs for better actionable insight.

Final Thoughts

EXCLAIM 2025 was more than a conference—it was a glimpse into the future of climate-informed decision-making powered by AI. It showcased that no single model is enough; instead, we need a collaborative ecosystem combining:

- Global physics and local observations

- ML flexibility and domain interpretability

- Open data and reproducible pipelines