Introduction

Computer vision is one of the main application areas of deep learning and AI. Recent research has brought it into a wide range of systems, including object detection and classification (Tesla's self-driving AI), image segmentation, face recognition (Facebook's DeepFace), and human pose estimation.

In this paper, we apply computer vision and machine learning to real-time human pose estimation and monitoring. The goal is to render the human gait from a live camera stream and to obtain the flexion angle at any of the major joints of the human frame, such as the knee, hip, or elbow.

The main aim of this research is to help orthopedic specialists assess, with high accuracy, the extent of injury or recovery in patients with joint and ligament impairments. To that end, we compare readings from the model with readings from a wearable knee flexion device built around a flex sensor, so that the two sets of values can be checked against each other in real time.

At the Centre for Data Science and Artificial Intelligence (DSAIL), Dedan Kimathi University of Technology, we have developed an NVIDIA Jetson Nano based system that processes real-time webcam video and determines the angle of flexion at the knee or arm joints, while comparing it with the angle obtained by a wearable flexion angle measurement device connected to the same Jetson Nano.

Currently, surgeons use goniometers and passive observation to estimate the knee joint's postoperative range of motion (ROM) [1]. Goniometers are prone to human error and are more invasive, since they require considerable contact with the patient. Other modern technologies for gait analysis and joint flexion measurement are expensive. For example, US-based ProtoKinetics has developed gait analysis software and the patented Zeno Walkway Gait Analysis System [2], which provides meaningful data to clinicians and researchers, but at a high price owing to development costs and the integration of costly pressure sensors and precision cameras. Such solutions are hard to access, especially for specialists and patients in low and middle-income countries (LMICs). There is therefore a need for effective, affordable, and less invasive solutions that accurately measure the joint flexion angle using modern technologies like AI.

In this research, we develop two methods of approximating the knee flexion angle and then compare the two implementations to validate their performance. The first is a wearable device that estimates the knee flexion angle using an Arduino Nano 33 BLE Sense and a flex sensor, powered by a 3.7V LiPo battery. The device is connected to the Jetson Nano via Bluetooth Low Energy (BLE). The measured angle is recorded in a real-time database, InfluxDB, from which we read and display the angles in a gauge plot hosted on a Dash application. The second implementation uses MediaPipe [3], a machine learning framework optimized for real-time tracking of the human pose and features. The human pose model takes a live webcam video stream as input and identifies 33 landmarks of the human frame. The model then overlays a skeletal structure on the mapped joints and tracks the position of each joint in each subsequent frame of the input video stream. This enables us to derive the flexion angle at the targeted knee joint for a patient in motion within the webcam's field of view.

The Main Objectives

- Developing the hardware and software of a wearable device for approximating knee joint flexion angle and designing a computer vision based human pose model to simultaneously estimate the knee joint flexion angles from live video input.

- Implementing the two methodologies to run on Nvidia's Jetson Nano for ease of deployment to remote areas.

- Drawing a comparison between the knee joint angles approximated by the wearable device and those estimated by the human pose model.

The Implementation

The knee flexion angle is measured in two ways: the human pose model, which extracts the flexion angle using computer vision, and the wearable device, which measures it using a flex sensor attached to a wearable knee brace.

The Human Pose Model

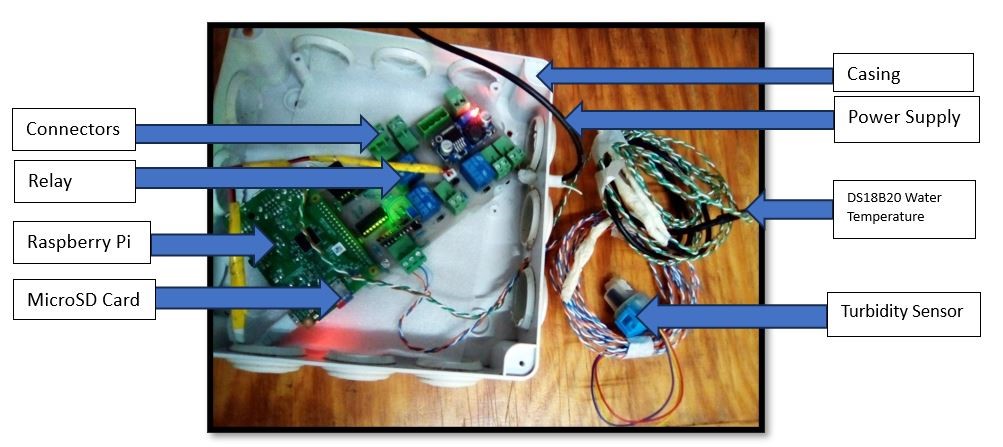

The human pose system consists of the Jetson Nano, our primary compute board, which runs the human pose algorithm, and the webcam, which captures the video input from which the knee flexion angle is extracted. With a human subject in the webcam's field of view, the model superimposes a skeleton over the estimated human frame based on landmarks of identifiable human features. The model then identifies the targeted knee joint and determines its flexion angle from the positions of the hip and the ankle relative to the knee along the x and y axes of the frame. Figure 1 shows how the hardware and software interact to measure the knee flexion angle.

Figure 1: Architecture of the human pose model
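The geometry described above can be sketched in a few lines. This is a minimal illustration and not necessarily the exact computation used in our implementation: it assumes that flexion is defined as 180° minus the interior angle at the knee between the knee-to-hip and knee-to-ankle vectors, so that a fully extended leg reads close to 0°. The function name `knee_flexion_deg` is hypothetical.

```python
import math


def knee_flexion_deg(hip, knee, ankle):
    """Return knee flexion in degrees from three (x, y) points.

    Assumption: flexion = 180 degrees minus the interior angle at the knee,
    so a straight leg gives roughly 0 and a deep bend gives a large value.
    """
    # Vectors from the knee towards the hip (thigh) and the ankle (shank).
    thigh = (hip[0] - knee[0], hip[1] - knee[1])
    shank = (ankle[0] - knee[0], ankle[1] - knee[1])

    norm = math.hypot(*thigh) * math.hypot(*shank)
    if norm == 0.0:
        raise ValueError("hip, knee and ankle must be distinct points")

    cos_interior = (thigh[0] * shank[0] + thigh[1] * shank[1]) / norm
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_interior = max(-1.0, min(1.0, cos_interior))
    interior = math.degrees(math.acos(cos_interior))
    return 180.0 - interior
```

If the points come from normalized image coordinates, scaling x by the frame width and y by the frame height before calling the function avoids distorting the angle on non-square frames.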

The hardware consists of a webcam and the Nvidia Jetson Nano. The webcam is connected to the Jetson Nano and records a live video stream of the patient under observation, providing the input data for our model. The Jetson Nano is a small but powerful computer that runs the human pose pipeline and outputs a video stream annotated with the measured flexion angle at a frame rate of 10-15 fps, with a mild latency between receiving a frame and displaying the flexion angle and storing it in the InfluxDB database.

i. The Nvidia Jetson Nano

The Jetson Nano Development Kit is a suitable choice for this implementation because it is an affordable and accessible single-board computer capable of running machine learning, deep learning, and AI applications. This is possible because it integrates a GPU with 128 CUDA cores in its processing architecture. GPU cores are well suited to the parallel processing that machine learning applications and the batch processing of real-time image data rely on.

Figure 1: The Jetson Nano

ii. Webcam

The USB webcam supplies the real-time video stream that our computer vision based human pose model processes, so that the model can superimpose a skeleton frame over the human subject in the webcam's field of view and concurrently determine the angle of flexion at the knee joint.

Figure 2: The Webcam

Software Description

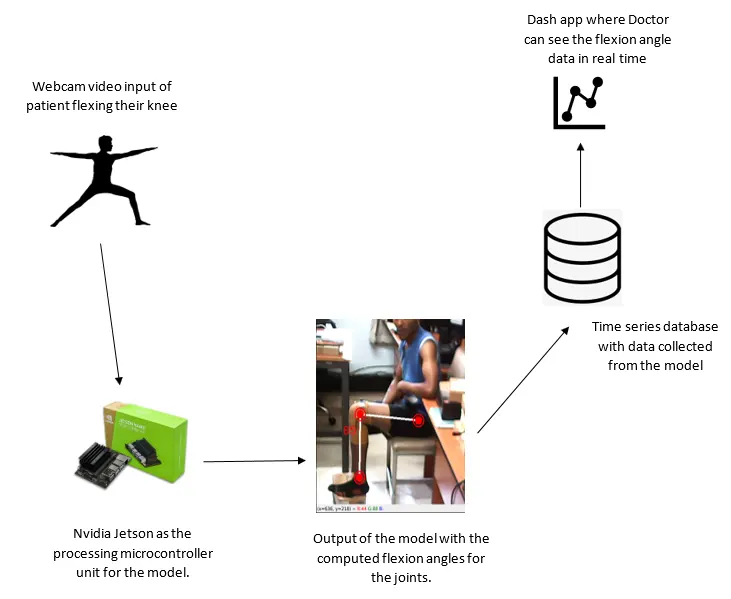

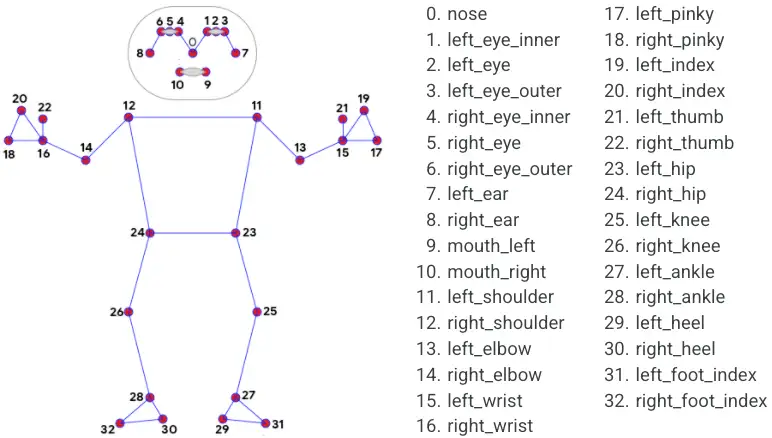

The human pose machine learning solution uses a two-step detector-tracker pipeline, which has proven effective in accurately tracking the human pose. The human pose model is based on MediaPipe, a cross-platform, open-source machine learning framework for building computer vision perception pipelines over live video streams, images, or streaming media. The model first locates the person's region of interest (ROI) within the input image frame. The pose tracker part of the pipeline then predicts the location of 33 pose landmarks of the human frame, as shown in Figure 3, together with the segmentation mask within the detected ROI. The model was trained on the COCO dataset [14], a large-scale object detection dataset suited to detecting people and localizing a person's key features in real time.

Figure 3: The human pose model landmarks to be captured.
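As a rough sketch of how the landmarks can be turned into a knee angle on a live stream, the example below uses MediaPipe's Python `mp.solutions.pose` interface together with OpenCV. It assumes the `mediapipe` and `opencv-python` packages are installed; the helper names (`flexion_from_landmarks`, `run_webcam`), the choice of the left leg, and the confidence thresholds are illustrative assumptions rather than our exact code. The indices 23, 25, and 27 are the left hip, knee, and ankle in MediaPipe's 33-landmark topology.

```python
import math

# Left hip, knee and ankle indices in MediaPipe Pose's 33-landmark topology.
LEFT_HIP, LEFT_KNEE, LEFT_ANKLE = 23, 25, 27


def flexion_from_landmarks(landmarks, width, height):
    """Return left-knee flexion in degrees from normalized pose landmarks."""
    # Scale normalized coordinates to pixels so the aspect ratio is respected.
    hip, knee, ankle = (
        (landmarks[i].x * width, landmarks[i].y * height)
        for i in (LEFT_HIP, LEFT_KNEE, LEFT_ANKLE)
    )
    thigh = (hip[0] - knee[0], hip[1] - knee[1])
    shank = (ankle[0] - knee[0], ankle[1] - knee[1])
    norm = math.hypot(*thigh) * math.hypot(*shank)
    if norm == 0.0:
        return 0.0  # Degenerate detection; treated as no flexion (assumption).
    cos_interior = (thigh[0] * shank[0] + thigh[1] * shank[1]) / norm
    cos_interior = max(-1.0, min(1.0, cos_interior))
    # Assumption: flexion = 180 degrees minus the interior knee angle.
    return 180.0 - math.degrees(math.acos(cos_interior))


def run_webcam(camera_index=0):
    """Overlay the skeleton and flexion angle on the webcam stream ('q' quits)."""
    # Imported lazily so the helper above works without these packages.
    import cv2
    import mediapipe as mp

    mp_pose = mp.solutions.pose
    cap = cv2.VideoCapture(camera_index)
    with mp_pose.Pose(min_detection_confidence=0.5,
                      min_tracking_confidence=0.5) as pose:
        while cap.isOpened():
            ok, frame = cap.read()
            if not ok:
                break
            height, width = frame.shape[:2]
            # MediaPipe expects RGB input, while OpenCV delivers BGR frames.
            results = pose.process(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            if results.pose_landmarks:
                angle = flexion_from_landmarks(
                    results.pose_landmarks.landmark, width, height)
                mp.solutions.drawing_utils.draw_landmarks(
                    frame, results.pose_landmarks, mp_pose.POSE_CONNECTIONS)
                cv2.putText(frame, f"{angle:.0f} deg", (20, 40),
                            cv2.FONT_HERSHEY_SIMPLEX, 1.0, (0, 255, 0), 2)
            cv2.imshow("Knee flexion", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    cap.release()
    cv2.destroyAllWindows()
```

Calling `run_webcam()` on a machine with a camera attached opens a preview window; the angle helper can be exercised without a camera by passing any sequence of objects that expose `x` and `y` attributes.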

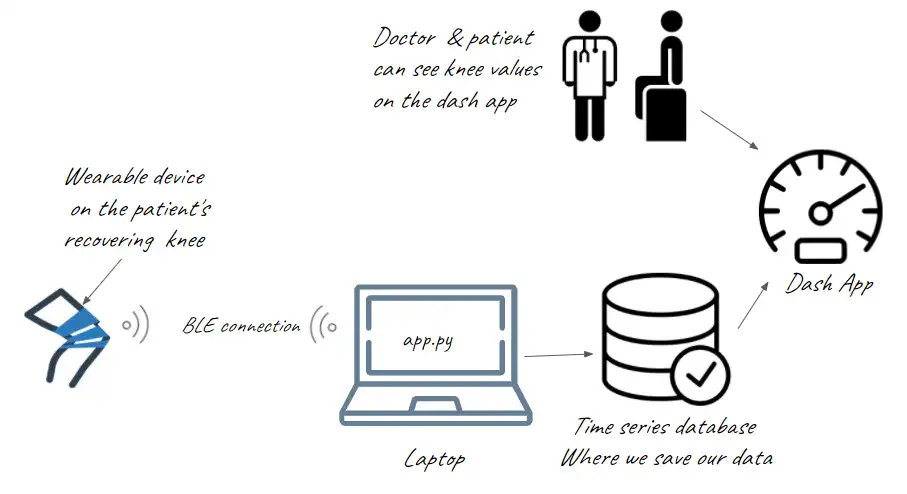

The Wearable Device

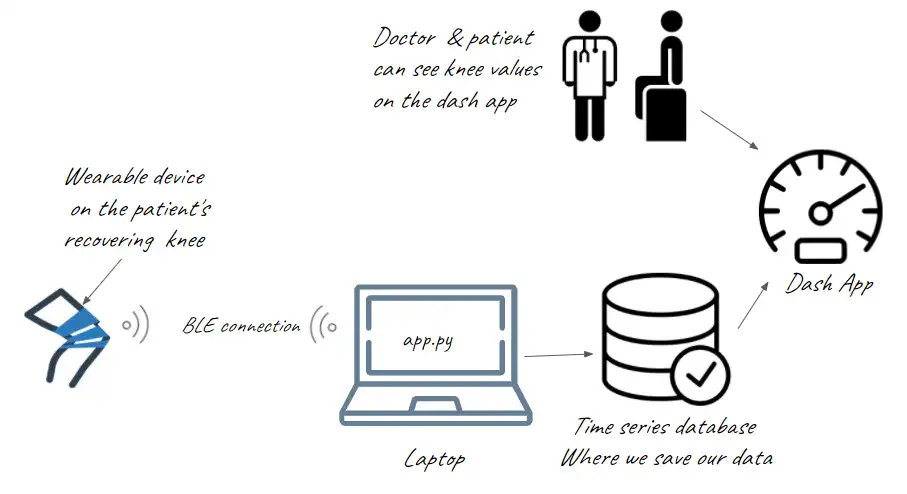

The wearable device estimates the knee flexion angle using the Arduino Nano 33 BLE Sense and a flex sensor, powered by a 3.7V LiPo battery. The BLE Sense and the flex sensor are attached to a wearable knee brace to capture the flexion angle from full extension to when the knee joint is fully flexed. The device is connected to the Jetson Nano via Bluetooth Low Energy (BLE). The measured angle is recorded in a real-time database, InfluxDB, from which we read and display the angles in a gauge plot hosted on a Dash application.

Figure 4: The wearable device implementation architecture.

Hardware Description

i. Arduino Nano BLE Sense.

The Arduino Nano 33 BLE Sense is our choice of microcontroller for the independent wearable device because it combines a tiny form factor with an assortment of environmental sensors and the ability to run various AI implementations on a small footprint. The BLE Sense is attached to the wearable knee flexion measuring device and reads the flexion angle from the flex sensor, which is then sent to an online database via the Jetson Nano.

Figure 5: The Arduino Nano BLE Sense

Software Description

The software is made up of three code components: an Arduino sketch and two Python scripts. The Arduino sketch processes data from the flex sensor and transmits the flexion angle of the patient's knee. The writing script stores the transmitted angle in InfluxDB, a time series database, while the reading script queries the flexion angles from the database and displays them on a real-time gauge plot.
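The data path from a raw sensor reading to a database record can be sketched as follows. This is an illustrative outline only: on the actual device the conversion runs in the Arduino code, and the calibration readings (`adc_straight`, `adc_bent`), the `max_deg` limit, the measurement name `knee_flexion`, and the `source` tag are all hypothetical values chosen for the example. The record string follows InfluxDB's line protocol (measurement, tags, fields, timestamp).

```python
def adc_to_flexion_deg(adc, adc_straight=520, adc_bent=780, max_deg=140.0):
    """Linearly map a raw flex-sensor ADC reading to a flexion angle.

    adc_straight and adc_bent are hypothetical calibration readings taken at
    full extension (0 degrees) and at max_deg of flexion respectively.
    """
    fraction = (adc - adc_straight) / (adc_bent - adc_straight)
    # Clamp so sensor noise cannot report angles outside the calibrated range.
    return max(0.0, min(max_deg, fraction * max_deg))


def to_line_protocol(angle_deg, source, timestamp_ns):
    """Format one reading as an InfluxDB line-protocol record."""
    return f"knee_flexion,source={source} angle={angle_deg:.1f} {timestamp_ns}"
```

Tagging each record with its source (for example `wearable` or `pose`) would let both implementations write to the same measurement and be queried side by side.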

Figure 6: System architecture of the wearable device

Results of Deployment

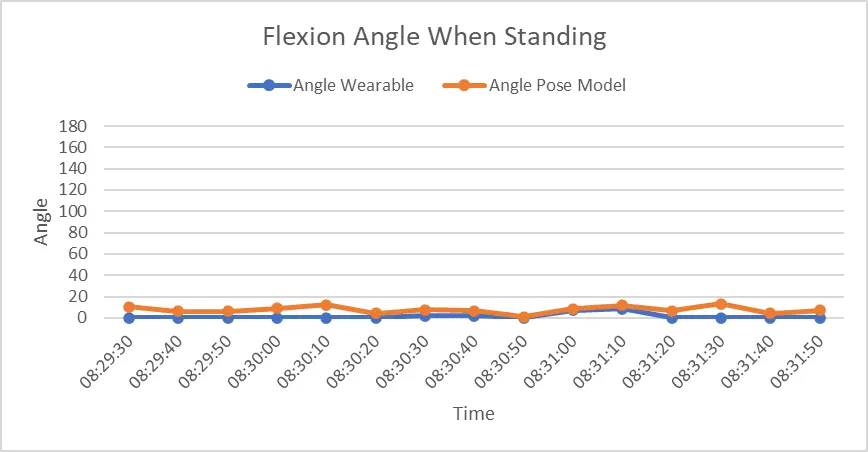

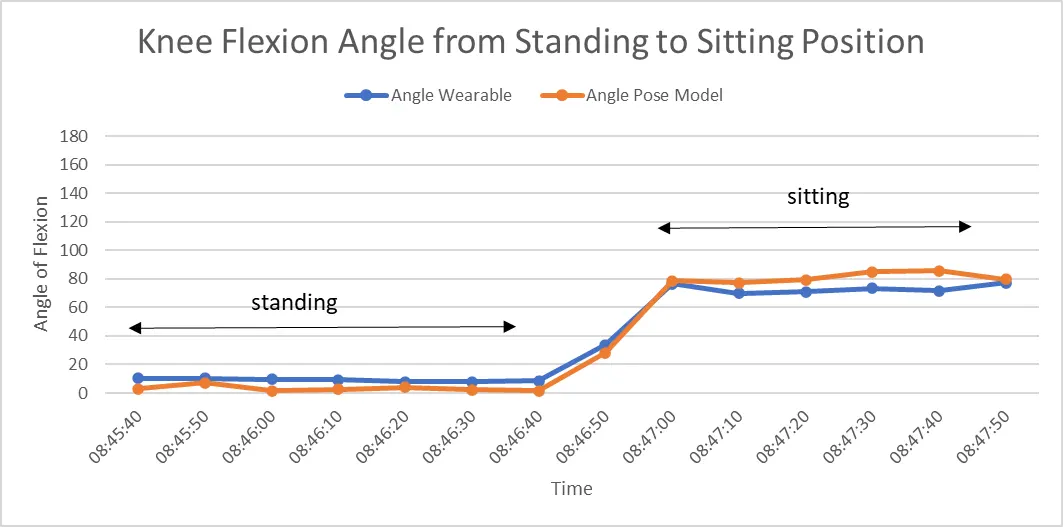

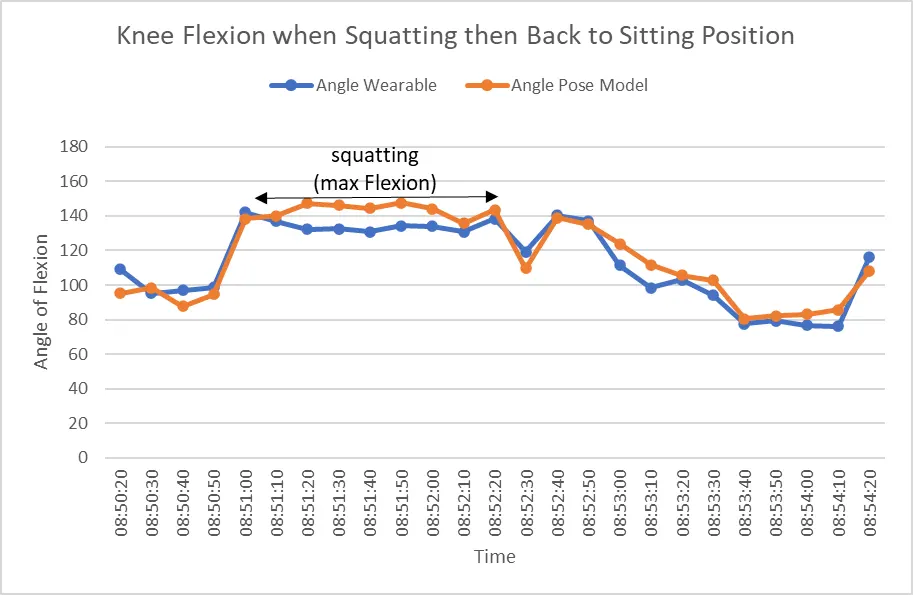

The two implementations were tested together and the flexion angles obtained from the two methodologies were compared, as shown in Figures 7, 8 and 9. The subject wore the knee brace fitted with the wearable device while standing within the webcam's field of view, so that the knee flexion angle was measured simultaneously by both implementations. We focused on three main positions of knee flexion: standing, sitting, and squatting. The knee flexion angles obtained by both implementations were logged in real time to an online database, InfluxDB, for comparison. Additionally, the implementations stored the flexion data locally in a CSV file, which was then imported into a Jupyter Notebook for further analysis.

Figure 7: Flexion angle when standing.

Figure 8: Flexion Angle from standing to sitting.

Figure 9: Flexion angles when in dynamic motion
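One way to quantify the agreement between the two sets of logged readings is to compute simple error statistics over the CSV data. The sketch below is an assumption about how such an analysis could look, not the notebook used in this work; the column names `wearable_deg` and `pose_deg` and the function name `compare_readings` are hypothetical.

```python
import csv
import io
import math


def compare_readings(csv_text):
    """Return (mean absolute error, RMSE, max absolute difference) in degrees.

    Expects CSV text with one row per time step and the hypothetical columns
    'wearable_deg' and 'pose_deg' holding the two flexion estimates.
    """
    rows = csv.DictReader(io.StringIO(csv_text))
    diffs = [float(r["pose_deg"]) - float(r["wearable_deg"]) for r in rows]
    if not diffs:
        raise ValueError("no readings to compare")
    mae = sum(abs(d) for d in diffs) / len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return mae, rmse, max(abs(d) for d in diffs)
```

For a file on disk, the text can be obtained with `open(path).read()` before calling the function. Reporting the root mean square error alongside the mean absolute error helps show whether the disagreement is spread evenly or dominated by a few large deviations.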

Conclusion

The results above show a direct comparison between the knee flexion angles estimated by the wearable device and the human pose model. Fig 7 compares the two readings while the subject is standing upright. As expected, both readings show near-zero flexion, because flexion of the joint is zero when the leg is fully extended. Fig 8 shows the results of the transition from a standing position to a sitting position. When seated, the expected angle of knee flexion is around 80° - 90°, and when squatting the expected angle of flexion is about 130° - 140°. The final graph, Fig 9, shows the comparison across the various positions tested with both the wearable device and the human pose model. The slight deviations observed can be attributed to approximation errors in the implementations and to inherent noise, particularly in the human pose algorithm, which continuously processes a real-time input video stream on the Jetson and re-estimates the human pose on every input frame.

Further Optimization

Further optimization is possible for both the human pose model and the wearable device. In this project the MediaPipe machine learning framework is used, but other frameworks such as Nvidia's TRT Pose and OpenPose could be used instead. For the wearable device, the flexion angle could also be obtained using IMU sensors in place of the flex sensor. These combine a gyroscope, accelerometer, and magnetometer to extract the relative angle between two reference points, which may give a more accurate angle measurement.

References

[1] D'Lima DD, Fregly BJ, Patil S, Steklov N, Colwell CW Jr. Knee joint forces: prediction, measurement, and significance. Proc Inst Mech Eng H. 2012 Feb;226(2):95-102. DOI: 10.1177/0954411911433372. PMID: 22468461; PMCID: PMC3324308. Available at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3324308/

[2] Gait analysis software and assessment systems (2022) ProtoKinetics. Available at: https://www.protokinetics.com/ (Accessed: October 14, 2022).

[3] MediaPipe Pose (2022) Google LLC. Available at: https://google.github.io/mediapipe/solutions/pose.html (Accessed: October 4, 2022).

[4] Allan, A. (2018) Introducing the Nvidia Jetson Nano, Hackster.io. Available at: https://www.hackster.io/news/introducing-the-nvidia-jetson-nano-aaa9738ef3ff (Accessed: October 17, 2022).